Sometimes, the simplest solutions are the most effective. This applies to everyday life problems as well as technical ones.

Today, I’ll share a common challenge we faced on our newly developed platform, namastedev, and the fix that not only improved our application’s performance but also gave our users a smoother experience. It’s worth a few minutes of your time, and if you have any suggestions for improvement, feel free to share them with us.

Every day, thousands of students visit the namastedev platform to watch video content on various tech topics. Since we offer both premium and non-premium content, we partnered with a third-party service to protect our premium videos with DRM (Digital Rights Management). This meant integrating their video player into our client, and users needed a special token from this service to play premium content.

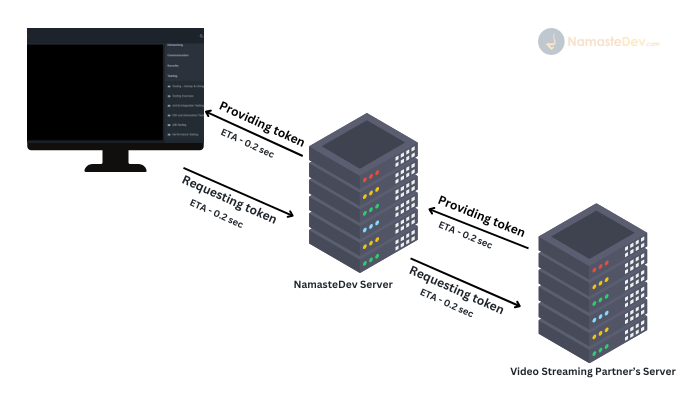

Whenever a user clicked on a video, the client made an API call to our server at `ourdomain.com/course/:courseSlug/:learningResSlug`. On the backend, we verified that the user was authenticated and eligible to watch the video. For premium content, the server then called the third-party DRM service to obtain a token, which it forwarded to the user’s browser for video playback.

As the image above shows, the client requested a token from namastedev’s server, which in turn requested one from the video streaming partner’s server. This extra round trip added delay, especially when network calls were slow to reach our server: acquiring a token took approximately 0.6 seconds.
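To make the cost of that extra hop concrete, here is a minimal sketch of the original (pre-pool) flow. It is not our production code: `fetchTokenFromPartner()` is a hypothetical stand-in for the partner’s token API that simply waits about 600 ms, and the `user`/`resource` shapes (`hasPremiumAccess`, `premium`, `videoId`) are assumptions made for illustration.

```javascript
// Sketch of the pre-pool flow. All names here are hypothetical.

// Stand-in for the DRM partner's token API: simulates a ~0.6 s round trip.
let issuedTokens = 0;
function fetchTokenFromPartner(videoId, latencyMs = 600) {
  return new Promise((resolve) => {
    setTimeout(() => {
      issuedTokens += 1;
      resolve(`${videoId}-token-${issuedTokens}`);
    }, latencyMs);
  });
}

// Roughly what the handler behind /course/:courseSlug/:learningResSlug did.
async function handleWatchRequest(user, resource) {
  if (!user) {
    return { status: 401, error: "Not authenticated" };
  }
  if (!resource.premium) {
    // Non-premium videos need no DRM token.
    return { status: 200, videoId: resource.videoId };
  }
  if (!user.hasPremiumAccess) {
    return { status: 403, error: "Not eligible for premium content" };
  }
  // Every premium request blocks on a fresh call to the partner.
  const token = await fetchTokenFromPartner(resource.videoId);
  return { status: 200, videoId: resource.videoId, token };
}
```

Because the `await` sits directly on the request path, every premium playback pays the partner’s round-trip time on top of our own processing.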

This setup worked well enough at first, but when we later monitored our API latency, this particular endpoint turned out to be slower than expected. So we turned our attention to reducing the latency of the video-watching APIs.

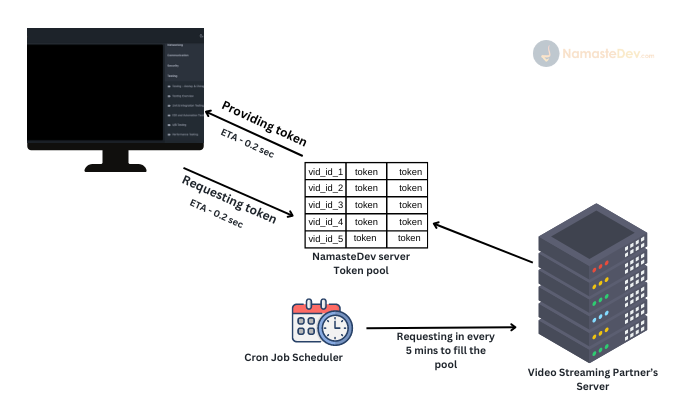

Excited by the prospect of improving the user experience, we came up with the idea of a token pool. Our streaming partner didn’t offer a token-pooling API, so we decided to manage the pool ourselves. We keep an in-memory object, `let TOKEN_POOL = {};`, and as soon as our Node server starts, we call `initializeTokenPool()`. This function holds a hardcoded list of the most frequently watched video IDs, fetches 100 tokens for each of those videos, and saves them into `TOKEN_POOL`. After `initializeTokenPool()` runs, `TOKEN_POOL` looks something like this:

```javascript
TOKEN_POOL = {
  video_id_1: ['token1', 'token2', 'token3', /* ... */ 'token100'],
  video_id_2: ['token1', 'token2', 'token3', /* ... */ 'token100'],
  video_id_3: ['token1', 'token2', 'token3', /* ... */ 'token100'],
  video_id_4: ['token1', 'token2', 'token3', /* ... */ 'token100'],
  // ... one entry per frequently watched video
};
```
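A minimal sketch of `initializeTokenPool()` might look like the following. The post doesn’t show the real implementation, so `fetchTokenFromPartner()` is a hypothetical stand-in for the partner’s token API, the video IDs are placeholders, and we assume a token isn’t tied to a particular user (pre-fetching tokens before anyone asks for them implies this).

```javascript
// Hypothetical stand-in for the streaming partner's token API.
let issuedTokens = 0;
async function fetchTokenFromPartner(videoId) {
  issuedTokens += 1;
  return `${videoId}-token-${issuedTokens}`;
}

const TOKENS_PER_VIDEO = 100;

// Hardcoded list of the most frequently watched videos (placeholder IDs).
const POPULAR_VIDEO_IDS = ["video_id_1", "video_id_2", "video_id_3", "video_id_4"];

// In-memory pool: video ID -> array of unused tokens.
const TOKEN_POOL = {};

// Called once when the Node server starts.
async function initializeTokenPool() {
  await Promise.all(
    POPULAR_VIDEO_IDS.map(async (videoId) => {
      const requests = Array.from({ length: TOKENS_PER_VIDEO }, () =>
        fetchTokenFromPartner(videoId)
      );
      TOKEN_POOL[videoId] = await Promise.all(requests);
    })
  );
}
```

This sketch fires all requests concurrently; if the partner enforces rate limits, fetching in smaller batches would be gentler on their API.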

Now `TOKEN_POOL` holds tokens for the most commonly watched videos. When a user requests a token for a specific video, we first check `TOKEN_POOL` for that `video_id`. If it is there, we simply `pop()` a token and return it. If it is not, we do two things: first, we add that `video_id` to `TOKEN_POOL` with an empty array (`TOKEN_POOL[video_id] = []`), so that the periodic refill starts stocking tokens for it; second, we fetch a token directly from our video streaming partner and return it.
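The lookup described above could be sketched like this. `getToken()` and `fetchTokenFromPartner()` are hypothetical names, the pool is seeded with two placeholder tokens, and the branch for a known video whose array has run empty before the next refill is our own assumption; the post doesn’t say how that case is handled.

```javascript
// Hypothetical stand-in for the streaming partner's token API.
let issuedTokens = 0;
async function fetchTokenFromPartner(videoId) {
  issuedTokens += 1;
  return `${videoId}-fresh-${issuedTokens}`;
}

// Seeded with placeholder tokens; in practice initializeTokenPool() fills it.
const TOKEN_POOL = { video_id_1: ["token1", "token2"] };

async function getToken(videoId) {
  const pooled = TOKEN_POOL[videoId];

  if (pooled === undefined) {
    // Miss: register the video so the periodic refill starts stocking it,
    // then serve this request with a direct call to the partner.
    TOKEN_POOL[videoId] = [];
    return fetchTokenFromPartner(videoId);
  }

  if (pooled.length > 0) {
    // Hit: the token is already in memory, no network call needed.
    return pooled.pop();
  }

  // Assumption: a known video whose tokens ran out before the next refill
  // also falls back to the partner.
  return fetchTokenFromPartner(videoId);
}
```

For example, the first two calls to `getToken("video_id_1")` return `"token2"` and then `"token1"` straight from memory; only after the array is drained does a request reach the partner.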

To keep the token pool adequately stocked, we set up a cron job that refills it every 5 minutes, bringing each video back up to at least 100 tokens.
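A refill job along these lines is sketched below. We use Node’s built-in `setInterval()` as a stand-in for the cron job (the cron expression `*/5 * * * *` describes the same schedule). `refillTokenPool()`, `startRefillJob()` and `fetchTokenFromPartner()` are hypothetical names, and the guard against overlapping runs is our own addition.

```javascript
// Hypothetical stand-in for the streaming partner's token API.
let issuedTokens = 0;
async function fetchTokenFromPartner(videoId) {
  issuedTokens += 1;
  return `${videoId}-token-${issuedTokens}`;
}

const TOKENS_PER_VIDEO = 100;
const REFILL_INTERVAL_MS = 5 * 60 * 1000; // every 5 minutes

// Placeholder state: one partly drained video, one added by a cache miss.
const TOKEN_POOL = { video_id_1: ["token1", "token2"], video_id_9: [] };

let refillInProgress = false;

// Top every video in the pool back up to TOKENS_PER_VIDEO tokens.
async function refillTokenPool() {
  if (refillInProgress) return; // skip if the previous run hasn't finished
  refillInProgress = true;
  try {
    await Promise.all(
      Object.keys(TOKEN_POOL).map(async (videoId) => {
        const missing = TOKENS_PER_VIDEO - TOKEN_POOL[videoId].length;
        if (missing <= 0) return;
        const fresh = await Promise.all(
          Array.from({ length: missing }, () => fetchTokenFromPartner(videoId))
        );
        // Append rather than overwrite: requests may have popped tokens meanwhile.
        TOKEN_POOL[videoId].push(...fresh);
      })
    );
  } finally {
    refillInProgress = false;
  }
}

// Call once at startup, after initializeTokenPool().
function startRefillJob() {
  return setInterval(() => {
    refillTokenPool().catch((err) => console.error("Token pool refill failed:", err));
  }, REFILL_INTERVAL_MS);
}
```

Because `pop()` takes tokens from the end of the array and refills are appended there too, the newest tokens are served first. If the partner’s tokens expire (the post doesn’t say), older tokens at the front could go stale, so a production version might need to track when each token was issued.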

Previously, it took about 0.6 seconds for a user to get a token to play a video. With the token pool, users receive a token in just 0.04 seconds, because it is already sitting in our server’s memory and no extra call to the streaming partner is needed.

After implementing this solution, we checked our latency graphs and were amazed by the results. The average API latency dropped from around 0.6 seconds to 0.04 seconds, a staggering 93.33% decrease, which has made video playback noticeably smoother for our users.
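For reference, the percentage follows directly from the two averages:

$$
\frac{0.6\,\text{s} - 0.04\,\text{s}}{0.6\,\text{s}} = \frac{0.56}{0.6} \approx 0.9333 = 93.33\%
$$

Put the other way round, $0.6 / 0.04 = 15$, so on average the endpoint responds roughly 15 times faster.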
