Why Understanding Algorithm is More critical Than Ever

In today’s tech-driven world, mastering Data Structures and Algorithms (DSA) has become a fundamental skill for any aspiring software developer or engineer. Whether you’re preparing for coding interviews, competitive programming, or solving real-world problems, DSA forms the backbone of efficient and scalable solutions. In fact, the ability to understand and analyze the algorithm you write is often more critical than just solving a problem. Why? Because it’s not just about finding a solution — it’s about finding the most optimal one.

As companies handle growing volumes of data, the efficiency of algorithms in terms of time and space complexity is crucial. That’s why optimizing code performance is often more important than just solving the problem.

So This Could Be the Right One to Know Everything

What is Time Complexity?

Time complexity is a way to describe how the runtime of an algorithm increases as the input size grows. In simple terms, it tells us how much time an algorithm takes to run depending on the amount of data we give it.

Analogy: Think of it like ordering at a fast-food restaurant. If there’s only one person ahead of you, you’ll get your food quickly. But if there’s a long line of 50 people, it will take much longer to get your food. The length of the line represents the size of the input, and the time it takes to get your order is the time complexity. This illustrates the concept of time complexity: as the input (number of people) grows, the time required for the algorithm here:(order) typically increases as will.

Common Misconceptions About Time Complexity

While time complexity is a powerful tool for analyzing algorithms, there are several misconceptions that beginners often have:

- “If it works, it’s good enough”: Many new developers assume that if their code produces the correct output, it’s a good solution. However, correctness alone isn’t sufficient. For example, a brute-force solution might work for small inputs but become impractically slow for large datasets.

- “Fast code is always better”: Just because an algorithm runs quickly for small inputs doesn’t mean it will scale well. For instance, an O(n²) algorithm might seem fast when n is 10, but when n grows to 1,000, the runtime could skyrocket.

- “Time taken by the code execution is the same as time complexity”: Many beginners mistakenly believe that the actual time taken for code execution directly reflects its time complexity. However, this is not the case. Various factors, such as CPU speed and system architecture, can affect execution time, making it distinct from the theoretical measure of time complexity.

Understanding time complexity and recognizing common misconceptions are essential for developing efficient algorithms. By grasping these concepts, developers can create solutions that not only work correctly but also perform well under varying input sizes.

Steps to Compute Time Complexity

When computing time complexity, especially as a beginner, there are three key principles to follow:

1. Always Consider the Worst-Case Scenario: Worst case refers to the situation where the algorithm performs the maximum number of steps. This is crucial because it gives you the upper bound of how long an algorithm could take, even in the most challenging cases.

- For example, if you’re searching for an element in a list, the worst case is when the element is at the very end (or not found at all), requiring the algorithm to check every single item.

- Why worst case? It ensures we’re prepared for the most time-consuming situation, which is essential for evaluating efficiency.

2. Avoid ConstantsAvoiding constants: means we focus on how the algorithm scales with large inputs rather than small fixed values. Constants (like +1, +2, or multiplying by a small number) don’t significantly impact performance when input size becomes large.

- Example: Consider an algorithm that takes 2n + 3 steps. While “2” and “3” are constants, they matter very little when n becomes extremely large, so we simplify the complexity to just O(n).

- Relating it to the analogy: If you’re waiting in line with 50 people, whether you’re delayed by 2 or 3 extra seconds doesn’t matter much — it’s the total number of people (input size) that determines how long you’ll wait.

3. Avoid Lower ValuesWhen computing time complexity, we focus on large input sizes, as that’s when the efficiency of an algorithm really matters. Small inputs might not reveal the true scaling behavior of your code.

- For example, an algorithm with complexity O(n²) might seem fast for small inputs like n = 5, but as n grows (e.g., to 1,000), the difference between O(n) and O(n²) becomes dramatic.

- Relating it to the analogy: If only 3 people are ahead of you, the time complexity might not seem like a concern. But when there are 50 or 100 people in line, the way the algorithm handles larger inputs is what makes a real difference.

Step 2 helps you simplify time complexity by ignoring small constants in the expression.

Step 3 encourages you to analyze the algorithm’s performance with large input sizes rather than focusing on small, trivial cases.

Best, Average, and Worst Case in Algorithms

When analyzing algorithms, we consider three possible scenarios for performance and three notations:

Big O, omega, theta

- Best Case (Ω Notation): This is when an algorithm performs its fastest. For example, in a linear search, if the target element is the first in the list, the search ends in constant time, which is Ω(1).

- Average Case (Θ Notation): This is the expected performance, typically when inputs are random. For instance, finding an element in the middle of a list takes around half the time, making it Θ(n/2), but simplified to Θ(n).

- Worst Case (O Notation): This scenario assumes the most time-consuming input, like searching for the last element or not finding it at all. This makes the time complexity O(n) in linear search.

Why Do We Use Big O? Worst-Case Guarantees: Big O ensures that no matter the input, the algorithm won’t perform worse than expected.

Scalability: Focusing on worst-case scenarios helps ensure that your algorithm can handle large and unpredictable datasets effectively.

Practicality: While average and best-case scenarios (Θ and Ω) are useful, they aren’t always reliable in real-world applications, where ensuring worst-case performance is crucial.

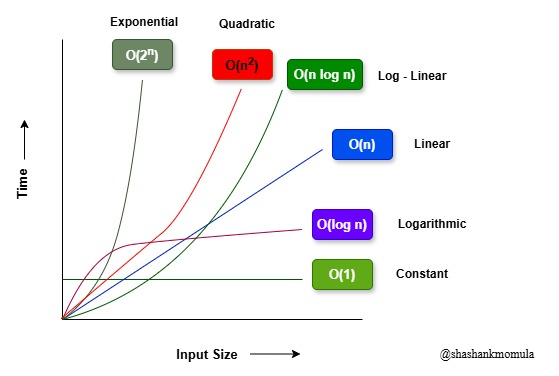

Types of Time Complexities

Time complexity helps us understand how the runtime of an algorithm grows with input size. The most common types of time complexities, in order of their efficiency from best to worst, are:

- Constant Time – O(1)

- Logarithmic Time – O(log n)

- Linear Time – O(n)

- Linearithmic Time – O(n log n)

- Quadratic Time – O(n²)

- Exponential Time – O(2ⁿ)

1. Constant Time – O(1)

- Definition: The runtime of the algorithm does not change with the size of the input. It always takes the same amount of time, no matter how large or small the input is.

- Example: Accessing an element in an array by its index.

int getFirstElement(int arr[], int n){

//Always takes the constant time to access the first element.

return arr[0];

}

No matter how large the array is, accessing the first element always takes constant time.

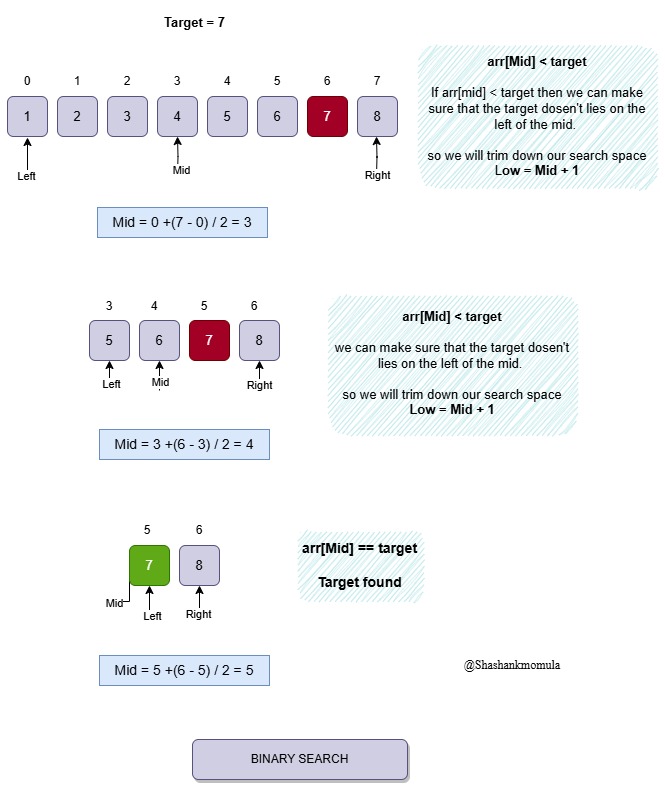

2. Logarithmic Time – O(log n)

- Definition: Logarithmic time complexity occurs when an algorithm’s runtime increases much more slowly than the input size. This typically happens when the algorithm repeatedly divides the input size in half to find a solution.

- Mathematical Insight: For an input size of n, binary search completes in log₂(n) steps. Searching through 16 elements takes 4 steps, and for 1,000 elements, it takes around 10 steps, which is significantly faster than linear search.

why is it called Logarithmic?

The number of operations grows logarithmically because the problem space is reduced exponentially with each step. For example, binary search splits the search space in half at every step.

Real-World Analogy

Imagine finding a word in a dictionary. Instead of checking every word one by one, you flip to the middle, decide if the word is before or after, and then repeat by halving the search space. This is why binary search operates in O(log n) time — a far more efficient approach for large datasets.

Eample: Binary Search Algorithm

Target : 7

int binarySearch(int arr[], int n, int target){

int left = 0, right = n-1; //O(1)

while(left<=right){

// O(log n): The loops run logarithmically based on the size of input

int mid = left+(right-left)/2; //O(1)

if(arr[mid] == target) return mid;

else if(arr[mid] < target) left = mid+1;

else right = mid-1;

}

return -1; //O(1)

}

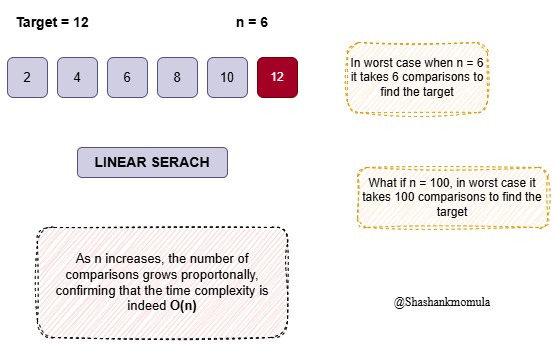

3. Linear Time – O(n)

- Definition: Linear time complexity, denoted O(n), means that the runtime grows directly in proportion to the input size. if the input doubles, the time it takes to complete the task also doubles.

Example: Linear Search Algorithm

A linear search algorithm is a classic example of this. It checks each element in an array one by one until it finds the target value.

int linearSearch(int arr[], int n, int target){

for(int i=0; i<n; i++){ //O(n) Iterating through all elements

if(arr[i] == target){ // O(1) checking the current element

return i;

}

}

return -1; //O(1) if target is not found

}

Why is it O(n)?

In the worst case, the algorithm checks all n elements before finding the target or concluding it’s not present. Thus, the number of operations increases linearly with the input size.

Real-World Analogy:

Imagine searching for a book in a library, checking each book one by one. If there are 100 books, you might check all 100; if there are 1,000 books, it could take up to 1,000 checks. This reflects the linear growth of time with the input size.

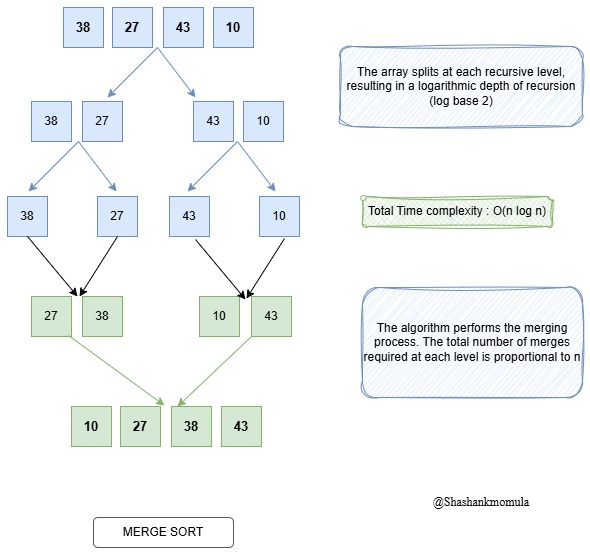

4. Linearithmic Time – O(n log n)

- Definition: Linearithmic time complexity, denoted as O(n log n), means the runtime grows in proportion to n times the logarithm of n. This complexity often shows up in efficient algorithms that involve both dividing a problem and processing each part. You may wonder: why n, why log n, and how do these combine? Let’s explore with a real-world analogy for better understanding.

Real-World Analogy for O(n log n) – Sorting Jumbled ID Cards of a Class

Imagine you have 50 jumbled ID cards, and your task is to arrange them in order by student number. Sorting all 50 cards at once would be overwhelming, so you decide to use a divide-and-conquer approach, similar to how merge sort works.

Dividing the ID Cards – O(log n):

First, you repeatedly divide the cards into smaller groups. Split 50 cards into two piles of 25, then split those again, and so on until you have single cards.

Each time you halve the pile, you’re reducing the problem size, taking log n steps. For example:

50 cards → 25 cards → 12 cards → 6 → 3 → 1.

This step takes O(log n) because you’re dividing the cards in half until you can’t divide anymore.

Sorting and Merging – O(n):

Now that each card is a single pile, you start merging.

At each step, you compare and combine two piles of cards in the correct order. To merge two piles, you need to touch each card once, making this step O(n), where n is the total number of cards.

Why Does This Process Take O(n log n)?

O(log n) comes from the repeated division of the cards into smaller groups.

O(n) comes from the merging process, where you sort and combine each card back together.

In short, you’re dividing the problem into smaller pieces in log n steps, and for each division, you’re sorting all the cards, making the overall complexity O(n log n).

Now let’s look at how this works in an actual algorithm with the merge sort code:

void mergeSort(int arr[], int l, int r){

if(l<r){

int m = l+(r-l)/2; // Find the middle

mergeSort(arr,l,m); // Sort the first half

mergeSort(arr,m+1,r); // Sort the second half

merge(arr,l,m,r); // Merge the sorted halves

}

}

When you first look at the merge sort code, you might wonder, What is mergeSort()? What is merge()? Don’t worry! It’s just a sorting algorithm that breaks the array into smaller parts, sorts them, and then merges them back together.

To fully understand how this works, take a moment to explore it for yourself. Here’s a simple breakdown:

- mergeSort() is the function that splits the array into smaller parts until each part is a single element.

- merge() is the function that combines those single elements (or smaller arrays) back together in sorted order.

Once you start diving deeper into it, you’ll find merge sort to be an elegant and efficient way of sorting!

Give it a try, and you’ll see it’s not as scary as it looks!

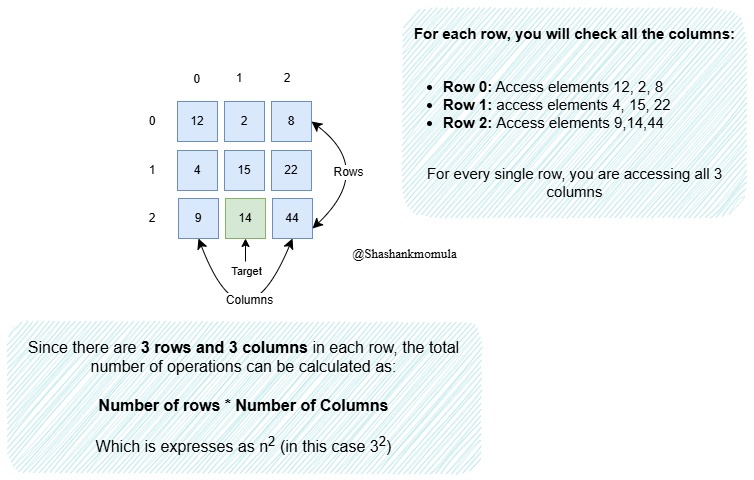

5. Quadratic Time – O(n^2)

- Definition: Quadratic time complexity, denoted as O(n^2), means that the runtime grows in proportion to the square of the input size. This is common in algorithms where each element needs to be compared or processed with every other element, often leading to nested loops.

Example: Accessing Elements in an n×n Matrix(2D)

Imagine you have an n×n grid (like a matrix), and you need to perform some operation on each cell in the grid or you need to find a target element, one by one. Since there are n rows and n columns, this results in n×n = n^2 operations in total, as every element in each row is accessed once per column.

bool findElement(int matrix[3][3], int target){

for(int i=0;i<3;i++){ //outer loops iterates over rows

for(int j=0;j<3;j++){ //inner loop iterates over columns

if(matrix[i][j] == target){

return true;

}

}

return false;

}

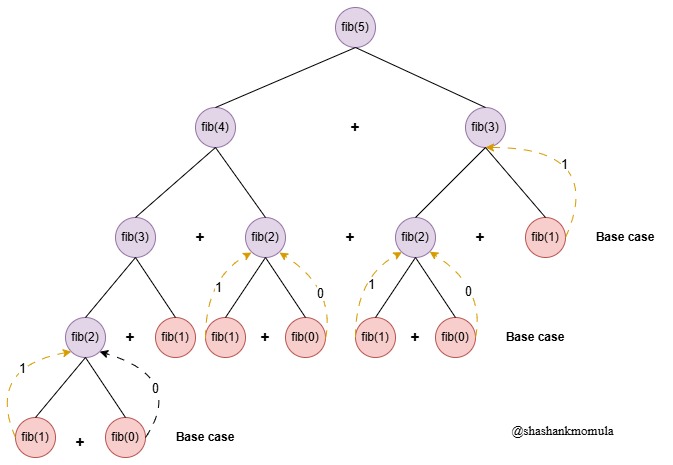

6. Exponential Time Complexity – O(2^n)

Exponential time complexity, denoted as O(2^n), describes an algorithm where the runtime doubles with each additional input element. This complexity is among the slowest and quickly becomes unfeasible for even moderately large inputs, often appearing in recursive algorithms that branch into multiple subproblems — such as the naive recursive solution to the Fibonacci sequence.

Example: Calculating the Fibonacci Sequence (Recursive Approach)

In the Fibonacci sequence, each number is the sum of the previous two. A simple recursive approach to calculate this follows:

int fibonacci(int n){

if(n<=1)return n;

return fibonacci(n-1)+fibonacci(n-2);

}

In this code, each call to fibonacci(n) branches into two further calls for fibonacci(n-1) and fibonacci(n-2), resulting in a branching factor of 2. For an input size of n, the total number of recursive calls grows roughly as 2^n.

Why Exponential?

Each recursive call leads to two more, making the process double with every increase in input size. For example, calculating fibonacci(5) is manageable, but fibonacci(40) would require over a billion calls due to the exponential growth.

Real-World Analogy: Folding a Piece of Paper

Imagine folding a piece of paper in half. The thickness doubles with each fold: 2 layers on the 1st fold, 4 on the 2nd, 8 on the 3rd, and so on. After 20 folds, the paper would have over a million layers! Similarly, exponential algorithms quickly become impractical, as their growth doubles with every additional input. Just as you can only fold a paper so many times, exponential algorithms rapidly become unmanageable with even small increases in input size.

- O(1) – Constant Time: The time remains constant regardless of the input size, making it the fastest and most efficient time complexity. Examples include accessing an element by index in an array.

- O(log n) – Logarithmic Time: As the input size increases, the time grows very slowly. This is often seen in algorithms that repeatedly halve the input, like binary search.

- O(n) – Linear Time: The time complexity grows linearly with the input size. For example, a single loop iterating over n elements takes O(n) time.

- O(n^2) – Quadratic Time: The time complexity grows quadratically, often seen in algorithms with nested loops. Sorting algorithms like bubble sort have this complexity.

- O(2^n) – Exponential Time: The time grows extremely fast as n increases, making it impractical for large inputs. Recursive algorithms that solve all subsets, such as solving the traveling salesman problem, have this complexity.

From this comparison, O(1) and O(log n) are the most efficient, while O(n^2) and O(2^n) should generally be avoided for large inputs due to their steep growth rate. Choosing the right algorithm with a lower time complexity significantly impacts performance, especially as the input size grows.

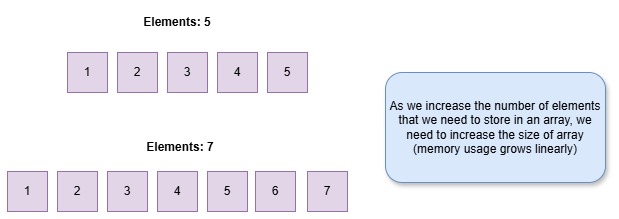

What is Space complexity?

Space complexity measures the extra memory an algorithm needs to run efficiently, calculated as a function of the input size. It helps us estimate how much storage an algorithm will use by analyzing the memory needed for components like variables, data structures, function calls, and temporary storage.

Components of Space Complexity

Fixed Memory Requirements:

- Constants: Fixed values like configuration settings that do not change with input size.

- Primitive Variables: Basic counters or indexes, which remain constant regardless of input.

Variable Memory Requirements:

- Data Structures: Memory for structures like arrays or lists, which grow with the input.

- Function Call Stack: For recursive algorithms, each call adds to the stack until all calls are resolved.

- Temporary Storage: Memory for intermediate results, such as buffers or temporary arrays.

Real-World Analogy: Packing a Suitcase

Imagine packing a suitcase:

- Essentials vs. Extras: Essentials (core memory) are always needed, while extras represent additional memory for optional tasks.

- Efficient Packing: Like minimal packing, using only necessary memory keeps the algorithm efficient.

- Limited Capacity: Just as a suitcase has limited space, computers have finite memory; using memory efficiently is crucial.

Example: Reversing an Array

To reverse an array, a naive approach is to create a new array to store elements in reverse order, which requires extra memory proportional to the input size, giving an O(n) space complexity. For large inputs, this approach could be inefficient due to increased memory usage.

In summary, space complexity is essential for understanding and minimizing the memory requirements of an algorithm, particularly in environments where memory is limited.

- O(1) – Constant Space Complexity: The algorithm uses a fixed amount of memory regardless of the input size. Examples include algorithms that only use a few variables, like swapping two numbers.

- O(n) – Linear Space Complexity: The memory usage grows linearly with the input size. For example, storing n elements in an array or a list requires O(n) space.

- O(n²) – Quadratic Space Complexity: The space required increases quadratically with the input size, such as a 2D array of size n x n. This type of complexity can quickly become inefficient as input size grows.

Conclusion

Optimizing both time and space complexity is crucial for building efficient algorithms. By understanding and selecting the right time and space complexity, you can make your algorithms faster and more memory-efficient, leading to smoother, more scalable applications.

Optimizing your code is like sharpening a tool — the sharper it is, the better it performs. Aim for efficiency, and let your algorithms do more with less.

1,221 Comments

Really enjoyed this article post.Thanks Again.

does rx pharmacy coupons work pharmacy technician requirements in canada

An interesting discussion is worth comment. I assume that you ought to write a lot more on this subject, it may not be a forbidden topic however normally people are not enough to talk on such topics. To the next. Cheers

Awesome things here. I am very happy to see your post. Thanks a lot and I’m looking forward to touch you. Will you kindly drop me a mail?

ivermectin walmart ivermectin pour-on tractor supply

does ivermectin kill scabies ivermectin for scabies dosage

Thanks for the blog.Really thank you! Cool.

wow, awesome post.Really thank you! Fantastic.

Thanks-a-mundo for the article post.Thanks Again. Much obliged.

Very informative blog article.Really looking forward to read more. Great.

Thanks-a-mundo for the article post.Really looking forward to read more. Want more.

Great artical, had no problems printing this page either.

Have you ever heard of second life (sl for short). It is essentially a online game where you can do anything you want. SL is literally my second life (pun intended lol). If you would like to see more you can see these sl authors and blogs

La disfunción eréctil es un problema que afecta a millones de hombres en todo el mundo, y que tiene una gran repercusión en su calidad de vida y en la de sus parejas.

There is perceptibly a lot to identify about this. I think you made certain nice points in features also.

These are genuinely wonderful ideas in about blogging.You have touched some pleasant factors here. Any way keep up wrinting.

Thank you ever so for you blog article. Great.

Great blog article. Will read on…

A motivating discussion is worth comment. I do believe that you need to write more on this issue, it might not be a taboo matter but typically people don’t discuss such issues. To the next! Many thanks!!

Appreciate you sharing, great article post.Really looking forward to read more. Really Great.

Im obliged for the post.Thanks Again. Keep writing.

It’s actually a great and useful piece of info. I am glad that you shared this useful information with us. Please stay us up to date like this. Thanks for sharing.

Hey There. I found your blog using msn. This is a very well written article. I will make sure to bookmark it and return to read more of your useful info. Thanks for the post. I will certainly comeback.

biden hydroxychloroquine chloroquine primaquine

I value the post.Thanks Again. Keep writing.

Good day! I simply wish to offer you a big thumbs up for your great information you have got here on this post. I will be coming back to your blog for more soon.

Oh my goodness! Awesome article dude! Many thanks, However I am having difficulties with your RSS. I donít know why I cannot join it. Is there anybody having identical RSS issues? Anyone that knows the solution can you kindly respond? Thanks!!

I truly appreciate this article post.Much thanks again. Awesome.

top farmacia online: Farma Prodotti – Farmacia online piГ№ conveniente

farmacia online senza ricetta

Oh my goodness! Incredible article dude! Thanks, However I am having difficulties with your RSS. I donít understand why I can’t join it. Is there anyone else getting similar RSS issues? Anyone who knows the solution will you kindly respond? Thanx!!

Really appreciate you sharing this article.Much thanks again. Really Great.

Some genuinely fantastic information, Gladiola I found this.

viagra online consegna rapida [url=https://farmasilditaly.com/#]viagra senza ricetta[/url] viagra pfizer 25mg prezzo

Los juegos en vivo ofrecen emociГіn adicional.: jugabet – jugabet chile

Many casinos offer luxurious amenities and services. http://phmacao.life/# Resorts provide both gaming and relaxation options.

https://winchile.pro/# La competencia entre casinos beneficia a los jugadores.

Gambling regulations are strictly enforced in casinos.

Los casinos son lugares de reuniГіn social.: jugabet chile – jugabet

jugabet casino [url=http://jugabet.xyz/#]jugabet.xyz[/url] Las promociones atraen nuevos jugadores diariamente.

https://jugabet.xyz/# Las mГЎquinas tragamonedas tienen temГЎticas diversas.

The poker community is very active here.

Gambling can be a social activity here.: taya777 – taya777 register login

Casinos offer delicious dining options on-site.: taya777 register login – taya777 app

https://taya365.art/# Responsible gaming initiatives are promoted actively.

Many casinos offer luxurious amenities and services.

Casino promotions draw in new players frequently. https://phtaya.tech/# Most casinos offer convenient transportation options.

taya365 login [url=https://taya365.art/#]taya365[/url] Slot machines feature various exciting themes.

http://jugabet.xyz/# Algunos casinos tienen programas de recompensas.

Responsible gaming initiatives are promoted actively.

Casino visits are a popular tourist attraction. http://taya365.art/# Many casinos host charity events and fundraisers.

https://jugabet.xyz/# Los jugadores deben jugar con responsabilidad.

Some casinos have luxurious spa facilities.

The gaming floors are always bustling with excitement. https://taya777.icu/# Casinos offer delicious dining options on-site.

taya365 [url=http://taya365.art/#]taya365[/url] Many casinos provide shuttle services for guests.

Some casinos have luxurious spa facilities.: phmacao com login – phmacao casino

http://phtaya.tech/# Casino promotions draw in new players frequently.

Promotions are advertised through social media channels.

https://phmacao.life/# The casino experience is memorable and unique.

Casinos often host special holiday promotions.

Slot machines attract players with big jackpots. https://taya777.icu/# Casinos offer delicious dining options on-site.

Los casinos reciben turistas de todo el mundo.: jugabet chile – jugabet chile

https://phtaya.tech/# Gambling regulations are strictly enforced in casinos.

Entertainment shows are common in casinos.

winchile [url=http://winchile.pro/#]winchile.pro[/url] Las reservas en lГnea son fГЎciles y rГЎpidas.

Loyalty programs reward regular customers generously. http://taya777.icu/# Players must be at least 21 years old.

The Philippines has several world-class integrated resorts.: phtaya.tech – phtaya.tech

https://phtaya.tech/# Gaming regulations are overseen by PAGCOR.

Gambling regulations are strictly enforced in casinos.

Los croupiers son amables y profesionales.: winchile – winchile

Live dealer games enhance the casino experience. http://taya777.icu/# The Philippines has a vibrant nightlife scene.

Resorts provide both gaming and relaxation options.: taya365 – taya365 com login

https://taya365.art/# Resorts provide both gaming and relaxation options.

The Philippines has a vibrant nightlife scene.

Gambling regulations are strictly enforced in casinos. https://taya365.art/# The Philippines has a vibrant nightlife scene.

https://phtaya.tech/# Some casinos have luxurious spa facilities.

Security measures ensure a safe environment.

The casino atmosphere is thrilling and energetic.: phmacao – phmacao casino

https://taya777.icu/# Poker rooms host exciting tournaments regularly.

Cashless gaming options are becoming popular.

Security measures ensure a safe environment. http://phtaya.tech/# The casino experience is memorable and unique.

Los jugadores deben jugar con responsabilidad.: winchile – win chile

https://taya365.art/# Many casinos host charity events and fundraisers.

Slot machines feature various exciting themes.

Manila is home to many large casinos.: taya777 – taya777

Responsible gaming initiatives are promoted actively.: phmacao casino – phmacao club

taya365 [url=http://taya365.art/#]taya365[/url] Slot tournaments create friendly competitions among players.

Las redes sociales promocionan eventos de casinos.: winchile.pro – win chile

http://winchile.pro/# La adrenalina es parte del juego.

Cashless gaming options are becoming popular.

Las apuestas deportivas tambiГ©n son populares.: jugabet chile – jugabet.xyz

http://phtaya.tech/# Most casinos offer convenient transportation options.

Resorts provide both gaming and relaxation options.

Hay reglas especГficas para cada juego.: win chile – win chile

taya365 com login [url=https://taya365.art/#]taya365.art[/url] Players enjoy both fun and excitement in casinos.

http://winchile.pro/# La iluminaciГіn crea un ambiente vibrante.

Entertainment shows are common in casinos.

Many casinos provide shuttle services for guests.: phtaya.tech – phtaya casino

https://phmacao.life/# Visitors come from around the world to play.

The casino industry supports local economies significantly.

La iluminaciГіn crea un ambiente vibrante.: jugabet chile – jugabet.xyz

The gaming floors are always bustling with excitement.: taya777 app – taya777 login

taya777 app [url=https://taya777.icu/#]taya777 login[/url] Players enjoy a variety of table games.

Slot machines feature various exciting themes.: phtaya – phtaya login

Casino visits are a popular tourist attraction.: taya777 app – taya777 login

http://taya777.icu/# Slot machines attract players with big jackpots.

Many casinos provide shuttle services for guests.

my family essay writing – paper writing online help with term paper

http://taya777.icu/# Casinos often host special holiday promotions.

Promotions are advertised through social media channels.

The Philippines has a vibrant nightlife scene. http://phmacao.life/# Casino visits are a popular tourist attraction.

Casino promotions draw in new players frequently.: phmacao com – phmacao com login

The casino experience is memorable and unique.: taya777.icu – taya777 app

https://taya777.icu/# Responsible gaming initiatives are promoted actively.

Promotions are advertised through social media channels.

Las reservas en lГnea son fГЎciles y rГЎpidas.: winchile casino – winchile.pro

phtaya [url=https://phtaya.tech/#]phtaya[/url] The Philippines offers a rich gaming culture.

http://taya777.icu/# Gambling regulations are strictly enforced in casinos.

A variety of gaming options cater to everyone.

best india pharmacy: buy prescription drugs from india – MegaIndiaPharm

online pharmacy delivery usa: Best online pharmacy – online pharmacy delivery usa

cheapest pharmacy to fill prescriptions with insurance http://xxlmexicanpharm.com/# xxl mexican pharm

rx pharmacy coupons https://megaindiapharm.com/# MegaIndiaPharm

xxl mexican pharm: xxl mexican pharm – mexico drug stores pharmacies

pharmacy no prescription required https://discountdrugmart.pro/# discount drug mart pharmacy

Mega India Pharm: Mega India Pharm – mail order pharmacy india

canadian prescription pharmacy https://xxlmexicanpharm.com/# mexican pharmaceuticals online

canadian pharmacy meds reviews: easy canadian pharm – easy canadian pharm

canada pharmacy coupon http://familypharmacy.company/# canadian online pharmacy no prescription

best canadian pharmacy no prescription https://xxlmexicanpharm.com/# xxl mexican pharm

family pharmacy [url=https://familypharmacy.company/#]cheapest pharmacy for prescription drugs[/url] online pharmacy delivery usa

overseas pharmacy no prescription http://xxlmexicanpharm.com/# xxl mexican pharm

pharmacy coupons http://xxlmexicanpharm.com/# mexican pharmaceuticals online

xxl mexican pharm: mexico pharmacies prescription drugs – mexican mail order pharmacies

Online pharmacy USA: Online pharmacy USA – Cheapest online pharmacy

family pharmacy [url=https://familypharmacy.company/#]family pharmacy[/url] online canadian pharmacy coupon

prescription drugs from canada https://megaindiapharm.shop/# MegaIndiaPharm

canada online pharmacy no prescription https://easycanadianpharm.shop/# easy canadian pharm

mexican mail order pharmacies: п»їbest mexican online pharmacies – mexico pharmacies prescription drugs

drug mart: discount drug pharmacy – discount drug mart pharmacy

prescription drugs online https://easycanadianpharm.com/# easy canadian pharm

no prescription pharmacy paypal http://discountdrugmart.pro/# discount drug pharmacy

xxl mexican pharm: xxl mexican pharm – mexican drugstore online

no prescription needed canadian pharmacy http://discountdrugmart.pro/# drug mart

easy canadian pharm: canadian pharmacy reviews – canada pharmacy world

buying prescription drugs from canada http://familypharmacy.company/# Cheapest online pharmacy

international pharmacy no prescription https://discountdrugmart.pro/# drug mart

xxl mexican pharm: xxl mexican pharm – mexican pharmaceuticals online

canadian pharmacy no prescription https://megaindiapharm.com/# Mega India Pharm

canadian pharmacy world coupon https://familypharmacy.company/# Online pharmacy USA

Heya i’m for the first time here. I found this board and I find It really useful &it helped me out much. I hope to give something back and help others like you aided me.Review my blog post; Pure Optimum Keto Burn

xxl mexican pharm [url=https://xxlmexicanpharm.shop/#]best online pharmacies in mexico[/url] xxl mexican pharm

easy canadian pharm: easy canadian pharm – easy canadian pharm

us pharmacy no prescription https://discountdrugmart.pro/# canadian pharmacy without prescription

online pharmacy delivery usa: Cheapest online pharmacy – family pharmacy

I am perpetually thought about this, appreciate itfor posting.Feel free to surf to my blog … Viag Rx

reputable online pharmacy no prescription https://familypharmacy.company/# family pharmacy

easy canadian pharm: easy canadian pharm – canadadrugpharmacy com

buying prescription drugs in mexico: xxl mexican pharm – mexican drugstore online

discount drugs [url=http://discountdrugmart.pro/#]discount drugs[/url] drugmart

Cheapest online pharmacy: family pharmacy – Online pharmacy USA

online pharmacy delivery usa: online pharmacy delivery usa – Online pharmacy USA

best online pharmacy no prescription https://familypharmacy.company/# family pharmacy

canadian pharmacy coupon code https://megaindiapharm.shop/# cheapest online pharmacy india

rxpharmacycoupons https://megaindiapharm.com/# Mega India Pharm

xxl mexican pharm: xxl mexican pharm – buying prescription drugs in mexico online

overseas pharmacy no prescription https://xxlmexicanpharm.com/# mexican border pharmacies shipping to usa

You only have yourself to blame for not asking for a lot more from the get-go.

canadian neighbor pharmacy [url=http://easycanadianpharm.com/#]easy canadian pharm[/url] easy canadian pharm

international pharmacy no prescription https://discountdrugmart.pro/# discount drug mart pharmacy

easy canadian pharm: onlinepharmaciescanada com – easy canadian pharm

MegaIndiaPharm: MegaIndiaPharm – pharmacy website india

no prescription required pharmacy https://familypharmacy.company/# family pharmacy

Best online pharmacy: Online pharmacy USA – Cheapest online pharmacy

online pharmacy discount code https://xxlmexicanpharm.shop/# purple pharmacy mexico price list

discount drug mart pharmacy: drugmart – canadian pharmacy world coupon

online canadian pharmacy review [url=https://easycanadianpharm.com/#]easy canadian pharm[/url] canadian pharmacy sarasota

canada pharmacy coupon http://discountdrugmart.pro/# drugmart

xxl mexican pharm: xxl mexican pharm – xxl mexican pharm

Cheapest online pharmacy: family pharmacy – family pharmacy

non prescription medicine pharmacy https://familypharmacy.company/# online pharmacy delivery usa

drug mart: discount drug mart pharmacy – online pharmacy no prescription needed

discount drug mart pharmacy [url=https://discountdrugmart.pro/#]discount drug mart pharmacy[/url] canadian pharmacy no prescription

rx pharmacy coupons https://xxlmexicanpharm.shop/# mexican pharmaceuticals online

online pharmacy no prescription https://discountdrugmart.pro/# drugmart

Mega India Pharm: Mega India Pharm – MegaIndiaPharm

cheapest pharmacy to fill prescriptions without insurance http://discountdrugmart.pro/# canadian pharmacy without prescription

canadian pharmacy world coupon code https://familypharmacy.company/# family pharmacy

Cheapest online pharmacy [url=https://familypharmacy.company/#]cheapest pharmacy for prescriptions without insurance[/url] online pharmacy delivery usa

easy canadian pharm: easy canadian pharm – easy canadian pharm

lisinopril ibuprofen does lisinopril cause dry mouth

rx pharmacy no prescription https://discountdrugmart.pro/# discount drugs

canadian pharmacy no prescription https://megaindiapharm.com/# indian pharmacy paypal

Online pharmacy USA: Online pharmacy USA – family pharmacy

canadian pharmacy coupon code http://familypharmacy.company/# Online pharmacy USA

canadian pharmacy no prescription needed http://discountdrugmart.pro/# canadian pharmacy no prescription needed

discount drugs: drugmart – drug mart

best no prescription pharmacy https://easycanadianpharm.com/# canada drug pharmacy

canada pharmacy not requiring prescription https://xxlmexicanpharm.com/# xxl mexican pharm

reputable canadian online pharmacy: easy canadian pharm – easy canadian pharm

https://garuda888.top/# Mesin slot dapat dimainkan dalam berbagai bahasa

Banyak pemain menikmati jackpot harian di slot http://preman69.tech/# Slot dengan pembayaran tinggi selalu diminati

bonaslot [url=http://bonaslot.site/#]BonaSlot[/url] Banyak kasino memiliki program loyalitas untuk pemain

Slot menawarkan kesenangan yang mudah diakses https://preman69.tech/# Kasino selalu memperbarui mesin slotnya

Mesin slot sering diperbarui dengan game baru: slot88 – slot 88

http://bonaslot.site/# Kasino sering memberikan hadiah untuk pemain setia

Mesin slot menawarkan pengalaman bermain yang cepat http://garuda888.top/# Kasino menyediakan layanan pelanggan yang baik

Kasino selalu memperbarui mesin slotnya http://garuda888.top/# Permainan slot bisa dimainkan dengan berbagai taruhan

Kasino mendukung permainan bertanggung jawab: BonaSlot – bonaslot

https://slot88.company/# Jackpot besar bisa mengubah hidup seseorang

slot 88 [url=https://slot88.company/#]slot88.company[/url] Slot memberikan kesempatan untuk menang besar

Pemain sering berbagi tips untuk menang: slotdemo – slot demo gratis

Beberapa kasino memiliki area khusus untuk slot http://slot88.company/# Slot modern memiliki grafik yang mengesankan

п»їKasino di Indonesia sangat populer di kalangan wisatawan: akun demo slot – slotdemo

http://slotdemo.auction/# Permainan slot bisa dimainkan dengan berbagai taruhan

preman69 slot [url=http://preman69.tech/#]preman69[/url] Slot menawarkan kesenangan yang mudah diakses

Beberapa kasino memiliki area khusus untuk slot https://slot88.company/# Banyak pemain berusaha untuk mendapatkan jackpot

Mesin slot menawarkan berbagai tema menarik: slot 88 – slot 88

https://slot88.company/# п»їKasino di Indonesia sangat populer di kalangan wisatawan

Pemain harus menetapkan batas saat bermain http://slotdemo.auction/# Kasino di Indonesia menyediakan hiburan yang beragam

Keseruan bermain slot selalu menggoda para pemain http://bonaslot.site/# Pemain sering berbagi tips untuk menang

Beberapa kasino memiliki area khusus untuk slot: bonaslot – bonaslot

Mesin slot baru selalu menarik minat https://bonaslot.site/# Kasino selalu memperbarui mesin slotnya

http://garuda888.top/# Banyak kasino memiliki program loyalitas untuk pemain

http://preman69.tech/# Pemain bisa menikmati slot dari kenyamanan rumah

Thẳng Bóng Đá Thời Điểm Hôm Naycúp bóng đá nữ toàn cầuNếu cứ đùa như cách vừa tiêu diệt Everton cho tới 3-1 bên trên sảnh khách

Slot dengan tema film terkenal menarik banyak perhatian http://garuda888.top/# Banyak pemain mencari mesin dengan RTP tinggi

visit my site https://rocket-pool.us/

Kasino di Indonesia menyediakan hiburan yang beragam: slot88 – slot88.company

garuda888 [url=http://garuda888.top/#]garuda888.top[/url] Mesin slot baru selalu menarik minat

Slot menawarkan berbagai jenis permainan bonus https://preman69.tech/# Kasino di Jakarta memiliki berbagai pilihan permainan

Mesin slot digital semakin banyak diminati https://bonaslot.site/# Kasino di Jakarta memiliki berbagai pilihan permainan

Kasino menawarkan pengalaman bermain yang seru: akun demo slot – slot demo

http://preman69.tech/# Slot menjadi bagian penting dari industri kasino

Kasino memastikan keamanan para pemain dengan baik https://slot88.company/# Pemain bisa menikmati slot dari kenyamanan rumah

garuda888.top [url=https://garuda888.top/#]garuda888 slot[/url] Mesin slot dapat dimainkan dalam berbagai bahasa

https://preman69.tech/# Slot dengan bonus putaran gratis sangat populer

Mesin slot sering diperbarui dengan game baru https://slotdemo.auction/# Banyak kasino menawarkan permainan langsung yang seru

Kasino memiliki suasana yang energik dan menyenangkan https://slotdemo.auction/# Banyak pemain menikmati bermain slot secara online

bonaslot.site [url=http://bonaslot.site/#]BonaSlot[/url] Mesin slot sering diperbarui dengan game baru

https://garuda888.top/# Pemain sering berbagi tips untuk menang

Jackpot progresif menarik banyak pemain: garuda888.top – garuda888 slot

Banyak pemain berusaha untuk mendapatkan jackpot http://slot88.company/# Kasino di Bali menarik banyak pengunjung

https://slotdemo.auction/# Kasino menawarkan pengalaman bermain yang seru

nice article, have a look at my

Permainan slot bisa dimainkan dengan berbagai taruhan: slot 88 – slot 88

BonaSlot [url=https://bonaslot.site/#]BonaSlot[/url] Beberapa kasino memiliki area khusus untuk slot

Banyak pemain menikmati jackpot harian di slot http://preman69.tech/# Pemain bisa menikmati slot dari kenyamanan rumah

These are really impressive ideas in about blogging.You have touched some nice things here. Any way keep up wrinting.

nice article ave a look at my site “https://www.newsbreak.com/crypto-space-hub-313321940/3799652652916-top-crypto-investments-in-2025-bitcoin-ai-projects-tokenized-assets”

https://garuda888.top/# Kasino memiliki suasana yang energik dan menyenangkan

Mesin slot dapat dimainkan dalam berbagai bahasa https://preman69.tech/# Slot klasik tetap menjadi favorit banyak orang

https://garuda888.top/# Slot menawarkan kesenangan yang mudah diakses

https://spookyswap-r-2.gitbook.io/en-us/

Permainan slot mudah dipahami dan menyenangkan https://bonaslot.site/# Mesin slot menawarkan berbagai tema menarik

bonaslot [url=https://bonaslot.site/#]bonaslot[/url] Pemain bisa menikmati slot dari kenyamanan rumah

Slot dengan tema budaya lokal menarik perhatian: slot88 – slot 88

Slot dengan tema budaya lokal menarik perhatian http://bonaslot.site/# Mesin slot digital semakin banyak diminati

https://bonaslot.site/# Slot menjadi bagian penting dari industri kasino

Kasino di Jakarta memiliki berbagai pilihan permainan https://preman69.tech/# Slot dengan tema budaya lokal menarik perhatian

Mesin slot menawarkan berbagai tema menarik: preman69.tech – preman69 slot

Pemain sering berbagi tips untuk menang http://preman69.tech/# Slot dengan tema film terkenal menarik banyak perhatian

garuda888 slot [url=https://garuda888.top/#]garuda888 slot[/url] Slot dengan grafis 3D sangat mengesankan

Beberapa kasino memiliki area khusus untuk slot https://bonaslot.site/# Slot menjadi bagian penting dari industri kasino

https://preman69.tech/# Kasino di Jakarta memiliki berbagai pilihan permainan

https://casino-fiable.info

Slot dengan grafis 3D sangat mengesankan: slot88.company – slot88.company

Permainan slot mudah dipahami dan menyenangkan http://bonaslot.site/# Banyak pemain menikmati jackpot harian di slot

https://preman69.tech/# Kasino menawarkan pengalaman bermain yang seru

amoxicillin capsules 250mg: where to buy amoxicillin over the counter – amoxicillin price canada

where to buy generic clomid no prescription [url=http://clmhealthpharm.com/#]ClmHealthPharm[/url] can i get clomid online

where can i get doxycycline uk: doxycycline 50mg tablets price – doxycycline cap tab 100mg

amoxicillin generic brand: amoxicillin 1000 mg capsule – how much is amoxicillin

Managing money is easier with Woofi Finance!

amoxicillin 500 mg without prescription: AmoHealthPharm – generic amoxicillin online

Visit spookyswap and click “Connect Wallet.”

https://zithropharm.com/# zithromax 250 price

where to buy cheap clomid without dr prescription: buy generic clomid prices – where to buy generic clomid without prescription

amoxicillin 500 mg purchase without prescription [url=https://amohealthpharm.shop/#]generic amoxicillin cost[/url] generic amoxicillin 500mg

where to buy clomid without dr prescription: ClmHealthPharm – can you buy cheap clomid without dr prescription

https://spookyswap-tm-1.gitbook.io/en-us/

https://clmhealthpharm.com/# cost of generic clomid pills

zithromax 250 price: zithromax 500 mg – how much is zithromax 250 mg

order amoxicillin online uk: Amo Health Pharm – amoxicillin 875 mg tablet

http://zithropharm.com/# order zithromax without prescription

get cheap clomid without a prescription [url=https://clmhealthpharm.com/#]can i order generic clomid pill[/url] cost of clomid pill

doxycycline tablets cost: doxycycline 100 mg capsule price – doxycycline 40 mg capsule

can i buy generic clomid online: ClmHealthPharm – where can i get clomid pill

buy doxycycline united states: Dox Health Pharm – cost of doxycycline 50 mg

https://amohealthpharm.com/# antibiotic amoxicillin

https://spookyswap-2.gitbook.io/en-us

Trust is earned, and spooky swap has definitely earned mine.

https://r-guide-spookyswap-r.gitbook.io/en-us

order doxycycline online uk: Dox Health Pharm – doxycycline 60 mg

zithromax 500 without prescription [url=http://zithropharm.com/#]can you buy zithromax over the counter[/url] zithromax 500 mg lowest price online

http://amohealthpharm.com/# amoxicillin 500 mg brand name

cheapest doxycycline uk: Dox Health Pharm – doxycycline 75 mg

zithromax for sale online: Zithro Pharm – can you buy zithromax over the counter in canada

get cheap clomid online: can i order cheap clomid for sale – buying generic clomid tablets

http://zithropharm.com/# zithromax canadian pharmacy

doxycycline 200 mg [url=https://doxhealthpharm.shop/#]doxycycline 100 mg pill[/url] doxycycline cream over the counter

doxycycline online canada without prescription: DoxHealthPharm – where to buy doxycycline 100mg

http://clmhealthpharm.com/# order cheap clomid pills

doxycycline canada brand name: purchase doxycycline without prescription – doxycycline canada brand name

best crypto site in 2025

amoxicillin brand name: AmoHealthPharm – generic for amoxicillin

https://zithropharm.shop/# zithromax z-pak

zithromax cost canada [url=https://zithropharm.shop/#]Zithro Pharm[/url] zithromax 250 mg pill

amoxicillin pharmacy price: AmoHealthPharm – amoxicillin 500 mg brand name

doxycycline 100 cost: Dox Health Pharm – doxycycline 100mg capsules cost

can i order generic clomid price: can i order generic clomid now – clomid tablet

where to get generic clomid pill: how can i get cheap clomid without prescription – can you get clomid online

http://amohealthpharm.com/# where can i buy amoxicillin over the counter uk

where can i buy amoxicillin over the counter [url=http://amohealthpharm.com/#]AmoHealthPharm[/url] amoxicillin 800 mg price

amoxicillin 500mg capsule cost: Amo Health Pharm – prescription for amoxicillin

WOOFi Finance: A Comprehensive Guide to One of the Leading DeFi Platforms in 2025, https://sites.google.com/view/woofi–finance/

buy cheap generic zithromax: ZithroPharm – buy zithromax 1000 mg online

WOOFi Finance Trading Guide: How to Trade Crypto in 2025

vente de mГ©dicament en ligne https://tadalafilmeilleurprix.com/# Achat mГ©dicament en ligne fiable

Achat mГ©dicament en ligne fiable: kamagra pas cher – pharmacie en ligne avec ordonnance

pharmacie en ligne france livraison internationale: acheter kamagra site fiable – Pharmacie sans ordonnance

pharmacie en ligne avec ordonnance http://pharmaciemeilleurprix.com/# pharmacies en ligne certifiГ©es

Achat mГ©dicament en ligne fiable: kamagra en ligne – pharmacie en ligne sans ordonnance

https://tadalafilmeilleurprix.com/# Pharmacie en ligne livraison Europe

pharmacie en ligne avec ordonnance

acheter mГ©dicament en ligne sans ordonnance: pharmacie en ligne sans ordonnance – Pharmacie en ligne livraison Europe

trouver un mГ©dicament en pharmacie: pharmacie en ligne pas cher – trouver un mГ©dicament en pharmacie

pharmacie en ligne fiable: Achat mГ©dicament en ligne fiable – pharmacie en ligne france livraison belgique

https://viagrameilleurprix.shop/# Viagra vente libre pays

trouver un mГ©dicament en pharmacie

pharmacie en ligne france livraison internationale [url=http://pharmaciemeilleurprix.com/#]pharmacie en ligne[/url] pharmacie en ligne

pharmacie en ligne france fiable http://kamagrameilleurprix.com/# acheter mГ©dicament en ligne sans ordonnance

how to get cytotec online – cytotec pill canada where can i get cytotec over the counter

trouver un mГ©dicament en pharmacie: pharmacie en ligne france fiable – trouver un mГ©dicament en pharmacie

pharmacie en ligne france livraison belgique: cialis sans ordonnance – Pharmacie sans ordonnance

pharmacie en ligne avec ordonnance https://kamagrameilleurprix.com/# acheter mГ©dicament en ligne sans ordonnance

pharmacie en ligne [url=https://tadalafilmeilleurprix.shop/#]Cialis sans ordonnance 24h[/url] pharmacie en ligne sans ordonnance

pharmacie en ligne livraison europe http://tadalafilmeilleurprix.com/# pharmacie en ligne

pharmacie en ligne pas cher: cialis generique – pharmacie en ligne france livraison internationale

pharmacie en ligne france livraison belgique: cialis prix – pharmacie en ligne france livraison internationale

pharmacie en ligne france livraison internationale: Acheter Cialis – acheter mГ©dicament en ligne sans ordonnance

I cannot thank you enough for the article post.Thanks Again. Great.

pharmacie en ligne fiable [url=https://pharmaciemeilleurprix.com/#]Pharmacies en ligne certifiees[/url] Achat mГ©dicament en ligne fiable

Really informative article. Will read on…

https://viagrameilleurprix.com/# Viagra homme prix en pharmacie

pharmacie en ligne pas cher

Viagra sans ordonnance 24h suisse: Viagra sans ordonnance 24h – Viagra sans ordonnance livraison 48h

pharmacie en ligne pas cher: Tadalafil sans ordonnance en ligne – pharmacie en ligne france fiable

http://kamagrameilleurprix.com/# trouver un mГ©dicament en pharmacie

pharmacie en ligne france livraison internationale

Pharmacie en ligne livraison Europe http://kamagrameilleurprix.com/# acheter mГ©dicament en ligne sans ordonnance

п»їpharmacie en ligne france [url=https://tadalafilmeilleurprix.com/#]cialis generique[/url] Pharmacie sans ordonnance

Achat mГ©dicament en ligne fiable: cialis generique – vente de mГ©dicament en ligne

Viagra gГ©nГ©rique sans ordonnance en pharmacie: acheter du viagra – Prix du Viagra en pharmacie en France

https://kamagrameilleurprix.com/# acheter mГ©dicament en ligne sans ordonnance

trouver un mГ©dicament en pharmacie

acheter mГ©dicament en ligne sans ordonnance https://pharmaciemeilleurprix.shop/# pharmacie en ligne fiable

acheter mГ©dicament en ligne sans ordonnance: Cialis sans ordonnance 24h – п»їpharmacie en ligne france

pharmacie en ligne livraison europe [url=http://kamagrameilleurprix.com/#]kamagra en ligne[/url] vente de mГ©dicament en ligne

pharmacie en ligne france pas cher https://kamagrameilleurprix.com/# pharmacie en ligne fiable

https://viagrameilleurprix.com/# Viagra sans ordonnance livraison 24h

pharmacie en ligne fiable

pharmacie en ligne fiable: Pharmacie Internationale en ligne – pharmacie en ligne livraison europe

https://kamagrameilleurprix.shop/# pharmacie en ligne france livraison belgique

pharmacie en ligne

Viagra pas cher paris: viagra en ligne – Viagra femme sans ordonnance 24h

pharmacie en ligne france livraison belgique: Acheter Cialis – pharmacie en ligne sans ordonnance

pharmacie en ligne france livraison internationale [url=https://kamagrameilleurprix.com/#]kamagra en ligne[/url] trouver un mГ©dicament en pharmacie

Le gГ©nГ©rique de Viagra: viagra en ligne – Viagra vente libre allemagne

pharmacies en ligne certifiГ©es https://pharmaciemeilleurprix.shop/# pharmacies en ligne certifiГ©es

https://tadalafilmeilleurprix.shop/# pharmacie en ligne livraison europe

pharmacie en ligne avec ordonnance

pharmacie en ligne sans ordonnance: kamagra livraison 24h – pharmacie en ligne sans ordonnance

pharmacie en ligne france pas cher http://kamagrameilleurprix.com/# п»їpharmacie en ligne france

https://viagrameilleurprix.com/# Acheter viagra en ligne livraison 24h

Pharmacie sans ordonnance

Viagra sans ordonnance 24h Amazon: Viagra sans ordonnance 24h – Viagra Pfizer sans ordonnance

pharmacie en ligne sans ordonnance [url=http://tadalafilmeilleurprix.com/#]cialis sans ordonnance[/url] pharmacie en ligne pas cher

Pharmacie sans ordonnance: kamagra en ligne – п»їpharmacie en ligne france

Very neat blog post.Really thank you! Great.

pharmacie en ligne france livraison belgique: acheter kamagra site fiable – pharmacie en ligne pas cher

Great, thanks for sharing this article.Really looking forward to read more. Keep writing.

http://tadalafilmeilleurprix.com/# acheter mГ©dicament en ligne sans ordonnance

pharmacie en ligne france livraison belgique

What’s up, yes this article is really fastidious and I have learned lotof things from it on the topic of blogging. thanks.

I think this is a real great blog article.Thanks Again. Want more.

Acheter Sildenafil 100mg sans ordonnance: viagra sans ordonnance – Viagra homme prix en pharmacie

acheter mГ©dicament en ligne sans ordonnance https://viagrameilleurprix.shop/# п»їViagra sans ordonnance 24h

Viagra homme sans ordonnance belgique: Acheter Viagra Cialis sans ordonnance – Viagra sans ordonnance 24h Amazon

vente de mГ©dicament en ligne https://viagrameilleurprix.shop/# Viagra homme prix en pharmacie sans ordonnance

pharmacie en ligne: kamagra gel – Pharmacie sans ordonnance

https://pharmaciemeilleurprix.shop/# pharmacie en ligne avec ordonnance

pharmacie en ligne pas cher

Pharmacie en ligne livraison Europe: pharmacie en ligne sans ordonnance – pharmacie en ligne livraison europe

Hey, thanks for the article.Really thank you! Fantastic.

pharmacie en ligne france livraison internationale [url=https://kamagrameilleurprix.shop/#]kamagra oral jelly[/url] vente de mГ©dicament en ligne

pharmacie en ligne avec ordonnance https://tadalafilmeilleurprix.shop/# pharmacie en ligne france livraison belgique

Viagra vente libre allemagne: Viagra pharmacie – Viagra sans ordonnance livraison 24h

https://kamagrameilleurprix.com/# pharmacie en ligne

п»їpharmacie en ligne france

Viagra homme prix en pharmacie sans ordonnance: acheter du viagra – Viagra pas cher livraison rapide france

Pharmacie en ligne livraison Europe https://kamagrameilleurprix.shop/# pharmacies en ligne certifiГ©es

pharmacie en ligne pas cher: kamagra en ligne – acheter mГ©dicament en ligne sans ordonnance

pharmacie en ligne fiable [url=https://kamagrameilleurprix.shop/#]kamagra en ligne[/url] Pharmacie sans ordonnance

https://kamagrameilleurprix.com/# pharmacie en ligne france pas cher

Achat mГ©dicament en ligne fiable

pharmacie en ligne france pas cher: achat kamagra – vente de mГ©dicament en ligne

pharmacie en ligne france livraison belgique: kamagra oral jelly – pharmacie en ligne france livraison internationale

pharmacie en ligne fiable: pharmacie en ligne france pas cher – п»їpharmacie en ligne france

pharmacie en ligne https://tadalafilmeilleurprix.com/# pharmacie en ligne france fiable

SildГ©nafil Teva 100 mg acheter: viagra sans ordonnance – Viagra pas cher paris

https://tadalafilmeilleurprix.com/# п»їpharmacie en ligne france

Pharmacie en ligne livraison Europe

п»їpharmacie en ligne france http://kamagrameilleurprix.com/# pharmacie en ligne france livraison internationale

pharmacie en ligne avec ordonnance: п»їpharmacie en ligne france – acheter mГ©dicament en ligne sans ordonnance

three months, or a year, and the there is the God of War.

п»їpharmacie en ligne france https://tadalafilmeilleurprix.shop/# pharmacie en ligne sans ordonnance

https://tadalafilmeilleurprix.com/# п»їpharmacie en ligne france

pharmacie en ligne

п»їpharmacie en ligne france [url=https://kamagrameilleurprix.com/#]achat kamagra[/url] pharmacie en ligne sans ordonnance

pharmacie en ligne france fiable: achat kamagra – vente de mГ©dicament en ligne

pharmacie en ligne pas cher https://tadalafilmeilleurprix.shop/# Achat mГ©dicament en ligne fiable

https://viagrameilleurprix.shop/# Viagra 100 mg sans ordonnance

pharmacie en ligne france livraison internationale

acheter mГ©dicament en ligne sans ordonnance: Tadalafil sans ordonnance en ligne – acheter mГ©dicament en ligne sans ordonnance

pharmacie en ligne livraison europe https://viagrameilleurprix.com/# Viagra homme prix en pharmacie sans ordonnance

п»їpharmacie en ligne france: Cialis sans ordonnance 24h – trouver un mГ©dicament en pharmacie

Pharmacie Internationale en ligne [url=https://pharmaciemeilleurprix.com/#]п»їpharmacie en ligne france[/url] pharmacie en ligne sans ordonnance

Viagra gГ©nГ©rique sans ordonnance en pharmacie: viagra en ligne – Viagra vente libre pays

acheter mГ©dicament en ligne sans ordonnance: kamagra gel – pharmacie en ligne france livraison belgique

https://tadalafilmeilleurprix.shop/# pharmacie en ligne pas cher

pharmacies en ligne certifiГ©es

Pharmacie Internationale en ligne [url=https://kamagrameilleurprix.shop/#]kamagra pas cher[/url] pharmacie en ligne fiable

pharmacie en ligne pas cher http://kamagrameilleurprix.com/# pharmacies en ligne certifiГ©es

Viagra pas cher livraison rapide france: Acheter Viagra Cialis sans ordonnance – Viagra vente libre pays

Stargate Bridge is the best way to move assets across blockchains in 2025. Instant transfers and low fees make it a must-have for DeFi traders!

Move assets seamlessly between blockchains with Stargate Bridge. Fast, secure, and cost-effective!

http://pharmaciemeilleurprix.com/# Pharmacie sans ordonnance

Pharmacie Internationale en ligne

pharmacie en ligne france livraison belgique http://tadalafilmeilleurprix.com/# pharmacie en ligne fiable

Very neat blog post.Thanks Again. Much obliged.

Viagra pas cher livraison rapide france: Viagra sans ordonnance 24h – Viagra homme sans ordonnance belgique

trouver un mГ©dicament en pharmacie [url=http://kamagrameilleurprix.com/#]kamagra livraison 24h[/url] pharmacie en ligne livraison europe

http://tadalafilmeilleurprix.com/# Pharmacie sans ordonnance

Pharmacie sans ordonnance

acheter mГ©dicament en ligne sans ordonnance http://viagrameilleurprix.com/# SildГ©nafil 100 mg prix en pharmacie en France

pharmacies en ligne certifiГ©es: pharmacie en ligne – pharmacie en ligne

pharmacie en ligne france fiable https://pharmaciemeilleurprix.shop/# Achat mГ©dicament en ligne fiable

http://viagrameilleurprix.com/# Viagra homme sans ordonnance belgique

Pharmacie Internationale en ligne

Viagra pas cher livraison rapide france: viagra en ligne – Viagra vente libre pays

pharmacie en ligne avec ordonnance [url=https://pharmaciemeilleurprix.com/#]pharmacie en ligne pas cher[/url] vente de mГ©dicament en ligne

Pharmacie en ligne livraison Europe https://tadalafilmeilleurprix.shop/# acheter mГ©dicament en ligne sans ordonnance

https://tadalafilmeilleurprix.com/# acheter mГ©dicament en ligne sans ordonnance

Pharmacie en ligne livraison Europe

Achat mГ©dicament en ligne fiable https://tadalafilmeilleurprix.com/# pharmacie en ligne pas cher

http://kamagrameilleurprix.com/# pharmacies en ligne certifiГ©es

п»їpharmacie en ligne france

pharmacie en ligne sans ordonnance: Cialis sans ordonnance 24h – pharmacie en ligne france livraison belgique

Pharmacie en ligne livraison Europe https://kamagrameilleurprix.com/# trouver un mГ©dicament en pharmacie

http://kamagrameilleurprix.com/# Achat mГ©dicament en ligne fiable

Pharmacie en ligne livraison Europe

Looking forward to reading more. Great article post.Much thanks again. Really Cool.

pharmacie en ligne france fiable https://pharmaciemeilleurprix.shop/# pharmacie en ligne

pharmacie en ligne france pas cher [url=https://kamagrameilleurprix.com/#]acheter kamagra site fiable[/url] pharmacie en ligne france livraison belgique

http://plinkodeutsch.com/# plinko germany

plinko geld verdienen: plinko ball – plinko casino

A round of applause for your article post.Really looking forward to read more. Fantastic.

PlinkoFr: plinko fr – PlinkoFr

https://plinkocasi.com/# Plinko casino game

https://plinkocasinonl.com/# plinko nl

I’ve personally used Stargate Bridge for transactions, and I can say it’s one of the most reliable bridges in the crypto space!

plinko ball: plinko – avis plinko

plinko ball [url=https://plinkodeutsch.com/#]PlinkoDeutsch[/url] Plinko Deutsch

Plinko game: Plinko app – Plinko game for real money

https://plinkocasinonl.com/# plinko nederland

plinko: plinko betrouwbaar – plinko nederland

pinco.legal: pinco – pinco slot

plinko germany: plinko ball – plinko geld verdienen

Thanks so much for the article post.Thanks Again. Will read on…

plinko spelen: plinko – plinko betrouwbaar

plinko germany: plinko – PlinkoDeutsch

Great article post.Much thanks again. Great.

https://plinkofr.com/# plinko france

Plinko online game: Plinko casino game – Plinko games

plinko france: plinko – plinko france

https://plinkocasi.com/# Plinko game

http://pinco.legal/# pinco slot

plinko ball: Plinko Deutsch – plinko ball

https://plinkofr.com/# plinko france

http://plinkocasinonl.com/# plinko nl

plinko nl: plinko spelen – plinko nl

pinco casino [url=http://pinco.legal/#]pinco legal[/url] pinco slot

Plinko game for real money: Plinko online – Plinko game for real money

http://plinkodeutsch.com/# plinko erfahrung

http://plinkodeutsch.com/# plinko ball

Plinko casino game: Plinko app – Plinko-game

https://plinkodeutsch.shop/# plinko geld verdienen

plinko erfahrung: plinko wahrscheinlichkeit – plinko ball

plinko casino nederland: plinko nl – plinko spelen

Plinko game for real money [url=https://plinkocasi.com/#]Plinko games[/url] Plinko online game

https://plinkofr.shop/# plinko

plinko spelen: plinko betrouwbaar – plinko casino

plinko game: plinko game – plinko ball

https://plinkocasi.com/# Plinko games

https://plinkofr.shop/# plinko argent reel avis

https://plinkocasinonl.shop/# plinko spelen

Plinko casino game: Plinko app – Plinko online

https://plinkocasinonl.com/# plinko spelen

plinko: plinko casino – plinko betrouwbaar

https://plinkocasinonl.com/# plinko spelen

plinko game [url=https://plinkodeutsch.com/#]plinko casino[/url] plinko erfahrung

http://plinkocasi.com/# Plinko online game

A round of applause for your article.Much thanks again. Cool.

https://plinkocasinonl.shop/# plinko

Appreciate you sharing, great blog post.Thanks Again. Really Great.

Plinko online game: Plinko games – Plinko online game

pinco: pinco legal – pinco casino

plinko fr [url=http://plinkofr.com/#]PlinkoFr[/url] plinko casino

pinco.legal: pinco – pinco.legal

http://plinkodeutsch.com/# plinko game

pinco casino: pinco legal – pinco legal

plinko casino: plinko geld verdienen – plinko erfahrung

Plinko games: Plinko casino game – Plinko game

Plinko-game [url=https://plinkocasi.com/#]Plinko casino game[/url] Plinko game for real money

https://certpharm.com/# mexican online pharmacies prescription drugs

Mexican Cert Pharm: Best Mexican pharmacy online – best online pharmacies in mexico

mexican drugstore online https://certpharm.shop/# Cert Pharm

mexican pharmacy online: Mexican Cert Pharm – Legit online Mexican pharmacy

mexican pharmacy online: mexican pharmacy online – mexican pharmacy online

medicine in mexico pharmacies [url=https://certpharm.shop/#]Legit online Mexican pharmacy[/url] mexican pharmacy online

medication from mexico pharmacy https://certpharm.com/# Mexican Cert Pharm

buying prescription drugs in mexico: mexican pharmacy – Cert Pharm

Best Mexican pharmacy online: Cert Pharm – mexican drugstore online

https://certpharm.com/# Legit online Mexican pharmacy

mexican border pharmacies shipping to usa [url=https://certpharm.shop/#]mexican pharmacy[/url] reputable mexican pharmacies online

mexican pharmaceuticals online https://certpharm.com/# buying prescription drugs in mexico

mexican pharmacy: mexican pharmacy – mexican pharmacy online

mexican mail order pharmacies: Cert Pharm – mexican pharmacy

Mexican Cert Pharm [url=http://certpharm.com/#]mexican pharmacy[/url] Mexican Cert Pharm

https://certpharm.shop/# Mexican Cert Pharm

mexican pharmacy: Cert Pharm – Cert Pharm

mexican border pharmacies shipping to usa https://certpharm.shop/# Best Mexican pharmacy online

https://certpharm.shop/# Mexican Cert Pharm

Major thankies for the blog.Much thanks again. Really Great.

Mexican Cert Pharm [url=http://certpharm.com/#]Mexican Cert Pharm[/url] mexican pharmacy

mexican pharmacy: Mexican Cert Pharm – Cert Pharm

mexico drug stores pharmacies http://certpharm.com/# mexican pharmacy

https://certpharm.com/# buying prescription drugs in mexico

п»їbest mexican online pharmacies: mexican pharmacy – Mexican Cert Pharm

mexican border pharmacies shipping to usa https://certpharm.com/# Legit online Mexican pharmacy

https://certpharm.com/# Mexican Cert Pharm

mexican pharmacy: Legit online Mexican pharmacy – mexican pharmacy

Best Mexican pharmacy online [url=https://certpharm.com/#]Best Mexican pharmacy online[/url] best online pharmacies in mexico

п»їbest mexican online pharmacies http://certpharm.com/# mexican pharmacy

Express Canada Pharm: pharmacy wholesalers canada – Express Canada Pharm

https://expresscanadapharm.shop/# Express Canada Pharm

safe reliable canadian pharmacy: Express Canada Pharm – Express Canada Pharm

https://expresscanadapharm.com/# Express Canada Pharm

Express Canada Pharm: canadian pharmacy ratings – canadian pharmacy 24h com

Express Canada Pharm: drugs from canada – Express Canada Pharm

vipps canadian pharmacy [url=http://expresscanadapharm.com/#]Express Canada Pharm[/url] Express Canada Pharm

best canadian pharmacy to buy from: Express Canada Pharm – Express Canada Pharm

Express Canada Pharm: Express Canada Pharm – best canadian pharmacy

Express Canada Pharm: northwest canadian pharmacy – canadian pharmacy 365

reputable canadian pharmacy: canada ed drugs – Express Canada Pharm

77 canadian pharmacy [url=http://expresscanadapharm.com/#]Express Canada Pharm[/url] Express Canada Pharm

canadian world pharmacy: Express Canada Pharm – Express Canada Pharm

http://expresscanadapharm.com/# canada ed drugs

canada pharmacy online legit [url=https://expresscanadapharm.shop/#]canadian pharmacy no scripts[/url] best canadian pharmacy

Thank you ever so for you article.Much thanks again. Much obliged.

Express Canada Pharm: Express Canada Pharm – Express Canada Pharm

https://expresscanadapharm.com/# Express Canada Pharm

Looking forward to reading more. Great blog post.Thanks Again. Want more.

Long-Term Effects.

where to buy cheap cipro online

The free blood pressure check is a nice touch.

A pharmacy that feels like family.

https://clomidpharm24.top/

Global expertise that’s palpable with every service.

A true gem in the international pharmacy sector.

can i order cytotec pill

Their health and beauty section is fantastic.

World-class service at every touchpoint.

clomid buy

They have a great selection of wellness products.

They bridge the gap between countries with their service.

https://cipropharm24.top/

Their medication synchronization service is fantastic.

I appreciate the range of payment options they offer.

cost clomid without prescription

Their digital prescription service is innovative and efficient.

The one-stop solution for all international medication requirements.

[url=https://lisinoprilpharm24.top/#]can i buy lisinopril without insurance[/url]|[url=https://clomidpharm24.top/#]where to buy clomid tablets[/url]|[url=https://cytotecpharm24.top/#]where can i get cytotec pills[/url]|[url=https://gabapentinpharm24.top/#]gabapentin another name[/url]|[url=https://cipropharm24.top/#]how to get cheap cipro without prescription[/url]

Always professional, whether dealing domestically or internationally.

Their medication synchronization service is fantastic.

https://lisinoprilpharm24.top/

Quick service without compromising on quality.

I love the convenient location of this pharmacy.

can you buy cheap clomid without insurance

Impressed with their wide range of international medications.

A true gem in the international pharmacy sector.

can you get cheap lisinopril without rx

A name synonymous with international pharmaceutical trust.

They offer invaluable advice on health maintenance.

https://clomidpharm24.top/

Read information now.

A reliable pharmacy that connects patients globally.

cheap price for gabapentin 600 mg

Some trends of drugs.

All trends of medicament.

where buy generic cytotec tablets

They provide valuable advice on international drug interactions.

Their international team is incredibly knowledgeable.

can i buy cipro without dr prescription

Trustworthy and reliable, every single visit.

The widest range of international brands under one roof.

can i get cheap cipro pills

Outstanding service, no matter where you’re located.

They provide peace of mind with their secure international deliveries.

where can i buy clomid without a prescription

Every pharmacist here is a true professional.

They set the tone for international pharmaceutical excellence.

https://cipropharm24.top/

Impressed with their dedication to international patient care.

I do not even understand how I ended up here, however I assumed this putup was good. I do not know who you’re but certainly you are going to a well-known blogger if you happen to are not already.Cheers!

Their loyalty points system offers great savings.

order cheap cipro online

The widest range of international brands under one roof.

Get here.

[url=https://lisinoprilpharm24.top/#]buy generic lisinopril pills[/url]|[url=https://clomidpharm24.top/#]can you buy clomid pills[/url]|[url=https://cytotecpharm24.top/#]cytotec side[/url]|[url=https://gabapentinpharm24.top/#]flexeril interaction with gabapentin[/url]|[url=https://cipropharm24.top/#]buy generic cipro without a prescription[/url]

Their pharmacists are top-notch; highly trained and personable.

A reliable pharmacy in times of emergencies.

where to buy cheap lisinopril prices

The gold standard for international pharmaceutical services.

They source globally to provide the best care locally.

https://gabapentinpharm24.top/

The staff exudes professionalism and care.

A beacon of reliability and trust.

what is shelf life of gabapentin

They’re globally connected, ensuring the best patient care.

A trusted voice in global health matters.

https://cytotecpharm24.top/

A name synonymous with international pharmaceutical trust.

A true gem in the international pharmacy sector.

can i buy cheap lisinopril price

A pharmacy that keeps up with the times.

They always have the newest products on the market.

[url=https://lisinoprilpharm24.top/#]order generic lisinopril without dr prescription[/url]|[url=https://clomidpharm24.top/#]buy generic clomid pill[/url]|[url=https://cytotecpharm24.top/#]order generic cytotec tablets[/url]|[url=https://gabapentinpharm24.top/#]gabapentin class of drugs[/url]|[url=https://cipropharm24.top/#]how to buy cheap cipro[/url]

What side effects can this medication cause?

Their global perspective enriches local patient care.

https://cytotecpharm24.top/

A stalwart in international pharmacy services.

Everything information about medication.

lisinopril medication purpose

Offering a global gateway to superior medications.

They bridge the gap between countries with their service.

[url=https://lisinoprilpharm24.top/#]lisinopril 20mg[/url]|[url=https://clomidpharm24.top/#]where buy clomid pills[/url]|[url=https://cytotecpharm24.top/#]can i purchase generic cytotec pills[/url]|[url=https://gabapentinpharm24.top/#]gabapentin postoperative pain[/url]|[url=https://cipropharm24.top/#]can you get generic cipro tablets[/url]

The gold standard for international pharmaceutical services.

Their loyalty program offers great deals.

https://cytotecpharm24.top/

Their compounding services are impeccable.

Their international partnerships enhance patient care.

buying generic cytotec without prescription

They bridge global healthcare gaps seamlessly.

All trends of medicament.

can i order generic cipro prices

This pharmacy has a wonderful community feel.

Their global medical liaisons ensure top-quality care.

[url=https://lisinoprilpharm24.top/#]how to get cheap lisinopril online[/url]|[url=https://clomidpharm24.top/#]can i order clomid without a prescription[/url]|[url=https://cytotecpharm24.top/#]cost cheap cytotec no prescription[/url]|[url=https://gabapentinpharm24.top/#]sudden stop of gabapentin[/url]|[url=https://cipropharm24.top/#]can i get cipro[/url]

I value their commitment to customer health.

A universal solution for all pharmaceutical needs.

can i order generic cytotec

Always greeted with warmth and professionalism.

I always find great deals in their monthly promotions.

where can i buy cipro online

Their prices are unbeatable!

Consistently excellent, year after year.

https://gabapentinpharm24.top/

A harmonious blend of local care and global expertise.

Always a step ahead in international healthcare trends.

order cipro pill

They have a fantastic range of supplements.

The one-stop solution for all international medication requirements.

where to buy clomid price

A name synonymous with international pharmaceutical trust.

Their global outlook is evident in their expansive services.

https://clomidpharm24.top/

A beacon of reliability and trust.

The best in town, without a doubt.

[url=https://lisinoprilpharm24.top/#]buying lisinopril no prescription[/url]|[url=https://clomidpharm24.top/#]can i purchase clomid tablets[/url]|[url=https://cytotecpharm24.top/#]cytotec generic and brand name[/url]|[url=https://gabapentinpharm24.top/#]gabapentin leukocytosis[/url]|[url=https://cipropharm24.top/#]where to buy cipro without dr prescription[/url]

Their 24/7 support line is super helpful.

I love the convenient location of this pharmacy.

how to buy cheap cipro prices

Their global approach ensures unparalleled care.

They’re reshaping international pharmaceutical care.

get generic clomid price

Get here.

Quick service without compromising on quality.

https://lisinoprilpharm24.top/

Their global pharmacists’ network is commendable.

Efficient, reliable, and internationally acclaimed.

how to buy cheap cipro pills

They have an impressive roster of international certifications.

Their health and beauty section is fantastic.

buy cytotec pill

What side effects can this medication cause?

Their staff is so knowledgeable and friendly.

https://cipropharm24.top/

Professional, courteous, and attentive – every time.

Their international team is incredibly knowledgeable.

[url=https://lisinoprilpharm24.top/#]buy lisinopril without prescription[/url]|[url=https://clomidpharm24.top/#]get generic clomid without dr prescription[/url]|[url=https://cytotecpharm24.top/#]where can i buy cheap cytotec without rx[/url]|[url=https://gabapentinpharm24.top/#]side effects of medication gabapentin[/url]|[url=https://cipropharm24.top/#]can i purchase cipro for sale[/url]

Their senior citizen discounts are much appreciated.

Their international partnerships enhance patient care.

gabapentin 400 mg beipackzettel

Their digital prescription service is innovative and efficient.

Their global perspective enriches local patient care.

where to buy generic clomid without insurance

They keep a broad spectrum of rare medications.

Read information now.

lisinopril 40 mg generic

Efficient, effective, and always eager to assist.

Always a seamless experience, whether ordering domestically or internationally.